I’m okay with game prices going up – they’ve fallen far behind inflation over the decades – though personally I favor DLC rather than one large shebang. Lower risk on both sides.

And there are a lot of games out there that, when including DLC, run much more than $100. Think of The Sims series or a lot of Paradox games. Stellaris is a fun, sprawling game, but with all DLC, it’s over $300, and it’s far from the priciest.

But if I’m paying more, I also want to get more utility out of what you’re selling. If a game costs $100, I expect to get twice what I get out of a competing $50 game.

And to be totally honest, most of the games that I really enjoy have complex mechanics and have the player play over and over again. I think that most of the money that game studios want is for asset creation. That can be okay, depending upon genre – graphics are nice, music is nice, realistic motion-capture movement is nice – but that’s not really what makes or breaks my favorite games. The novelty kind of goes away once you’ve experienced an asset a zillion times.

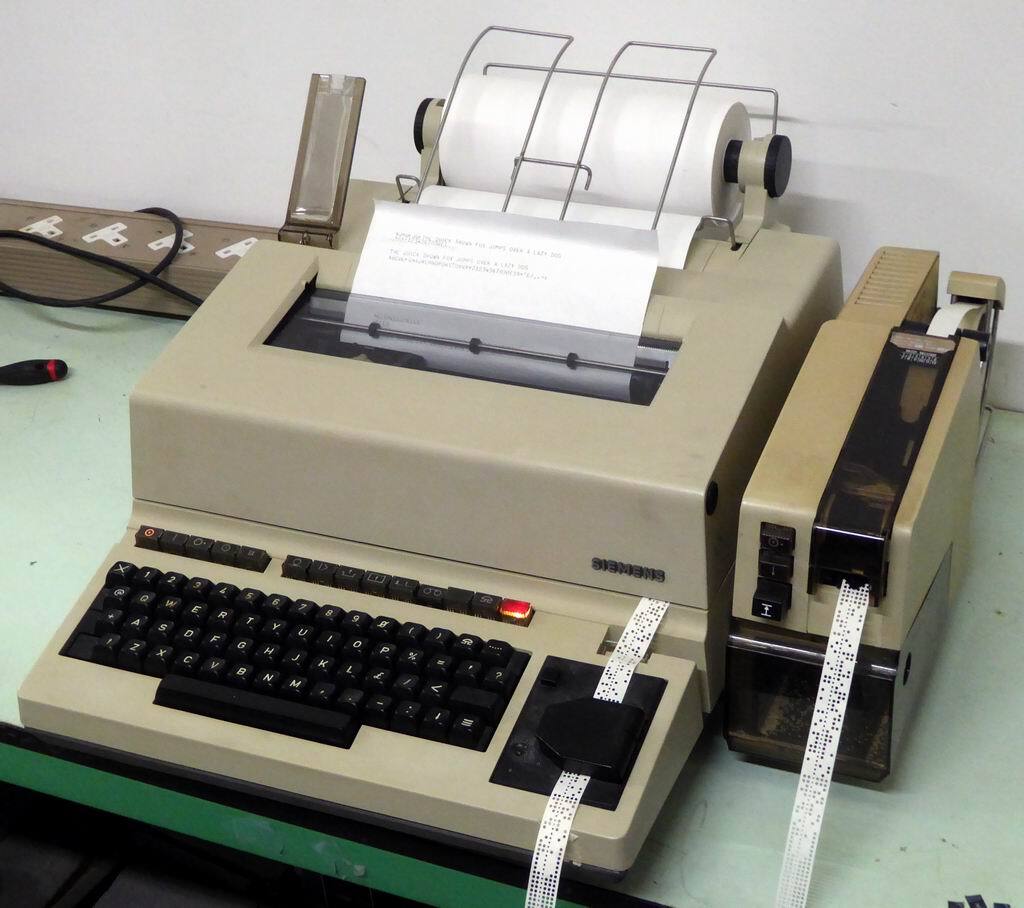

Smartphones – and to a lesser degree, tablets – are not a great programming platform. Yeah, okay, they have the compute power, but most programming environments – and certainly the ones that I’d consider the best ones – are text-based, and in 2025, text entry on a touchscreen still just isn’t as good as with a physical keyboard. I’ll believe that there is room to considerably improve on existing text-entry mechanisms, though I’m skeptical that touchscreen-based text entry is ever going to be on par with keyboard-based text entry.

You can add a Bluetooth keyboard. And it’s not essential. But it is a real barrier. If I were going to author Android software, I do not believe that I’d do the authoring on an Android device.

I don’t know about this “going backwards” stuff.

I can believe that a higher proportion of personal computer users in 1990 could program to at least some degree than, say, users of Web-browser-capable devices today.

But not everyone in 1990 had a personal computer, and I would venture to say that the group that did probably was not a representative sample of the population. I’d give decent odds that a lower proportion of the population as a whole could program in 1990 than today.

I do think that you could make an argument that the accessibility of a programming environment declined somewhat for a while, but I don’t know that the decline was monotonic.

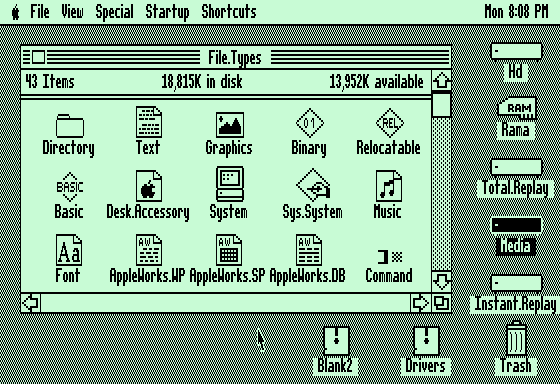

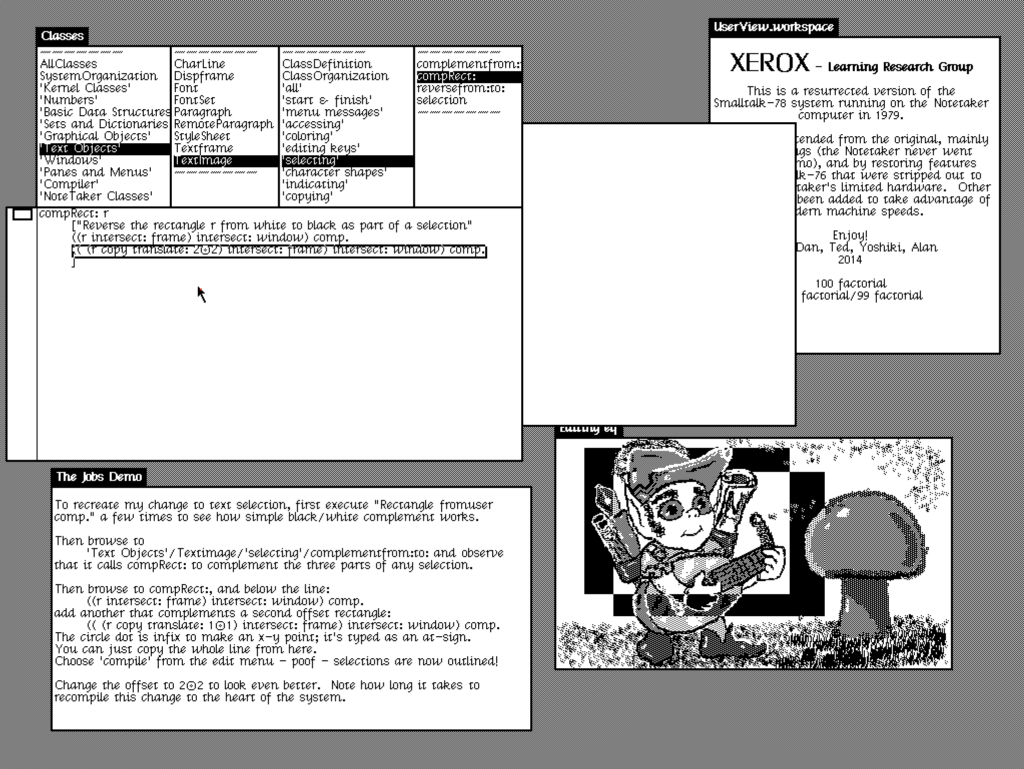

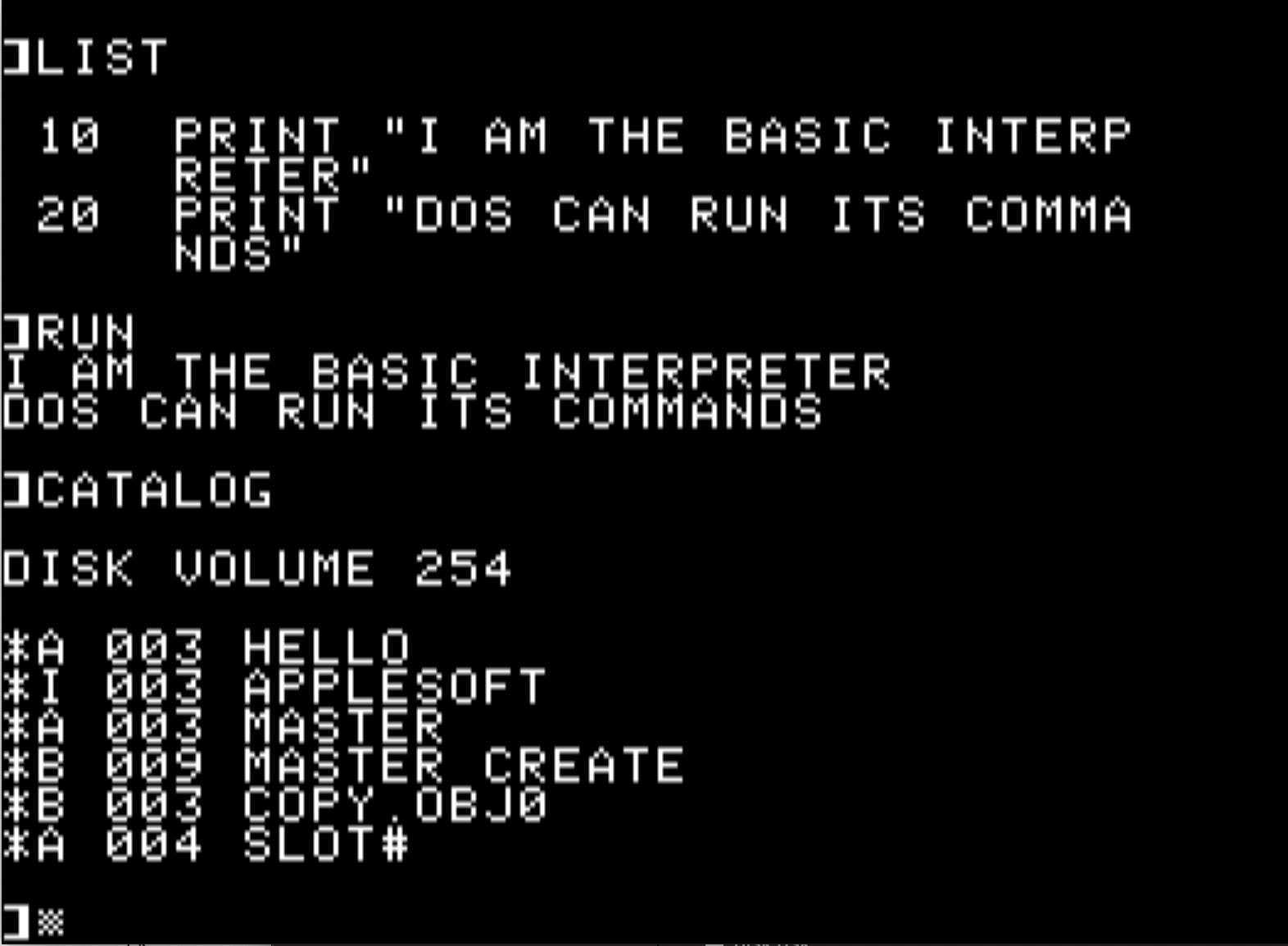

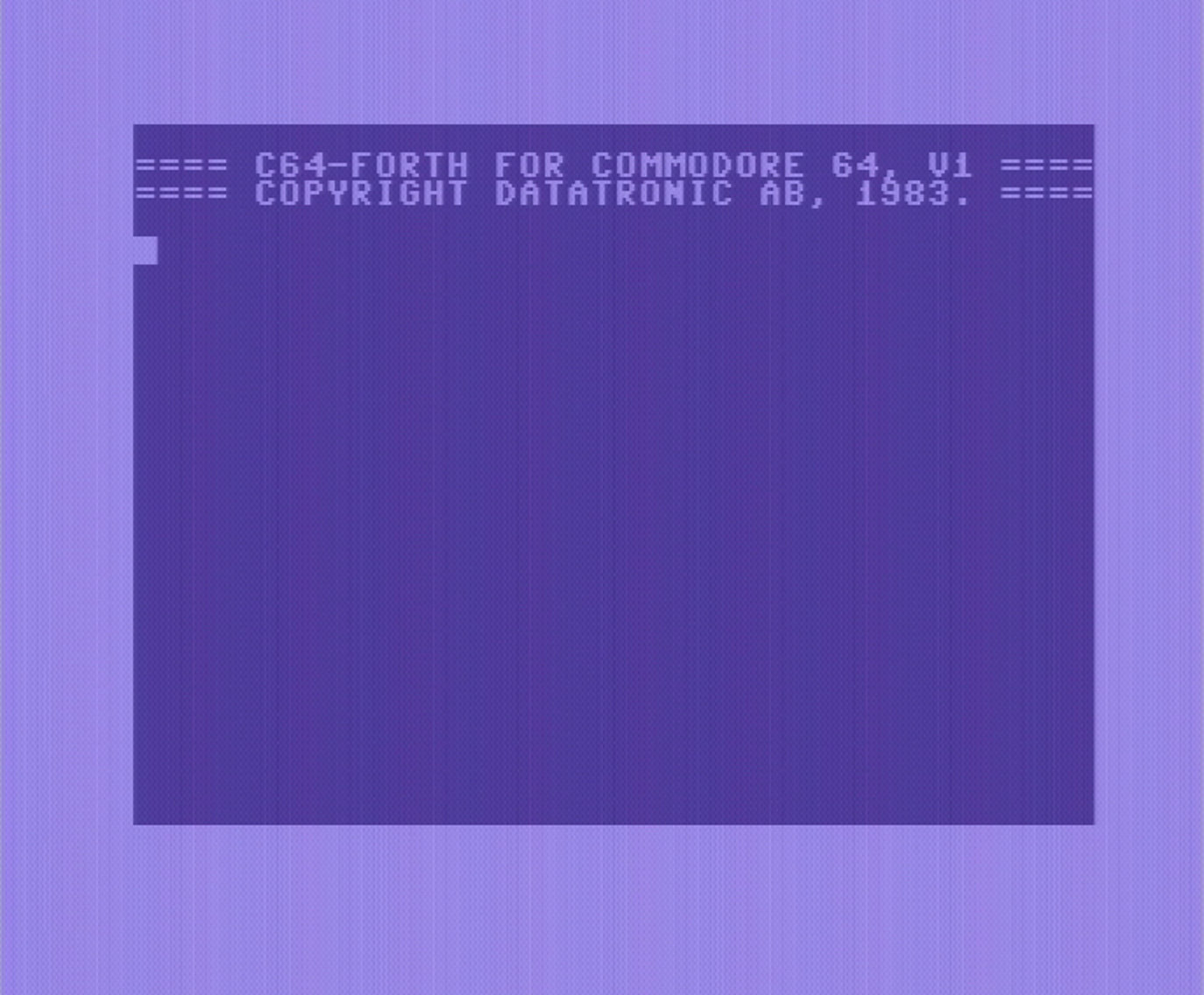

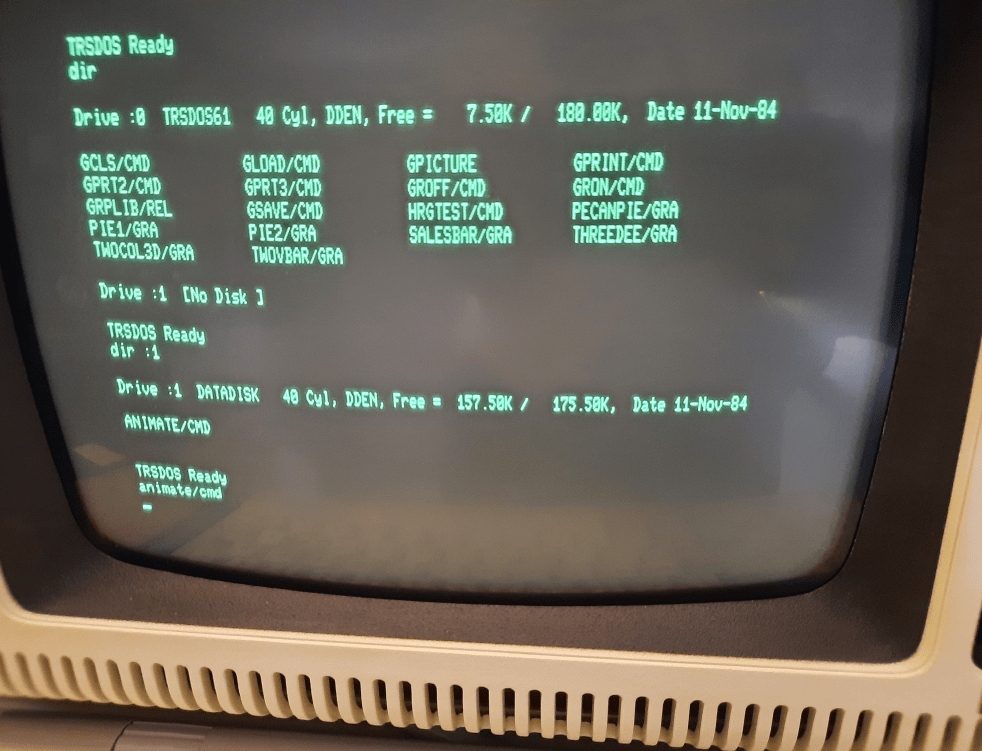

It was pretty common, for personal computers around 1980, to ship with some kind of BASIC programming environment. Boot up an Apple II, hit…I forget the key combination, but it’ll drop you straight into a ROM-based BASIC programming environment.

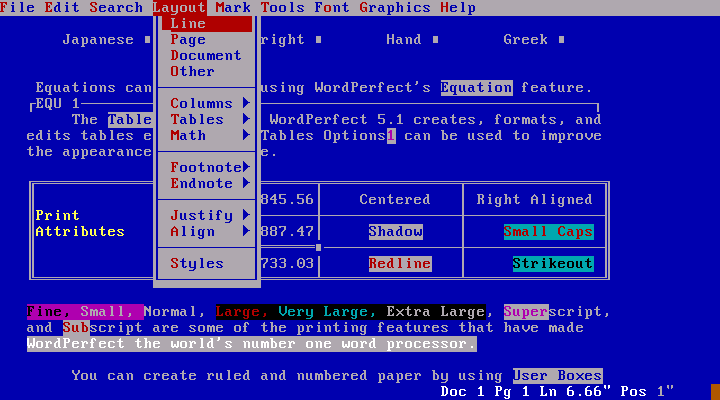

After that generation, things got somewhat weaker for a time.

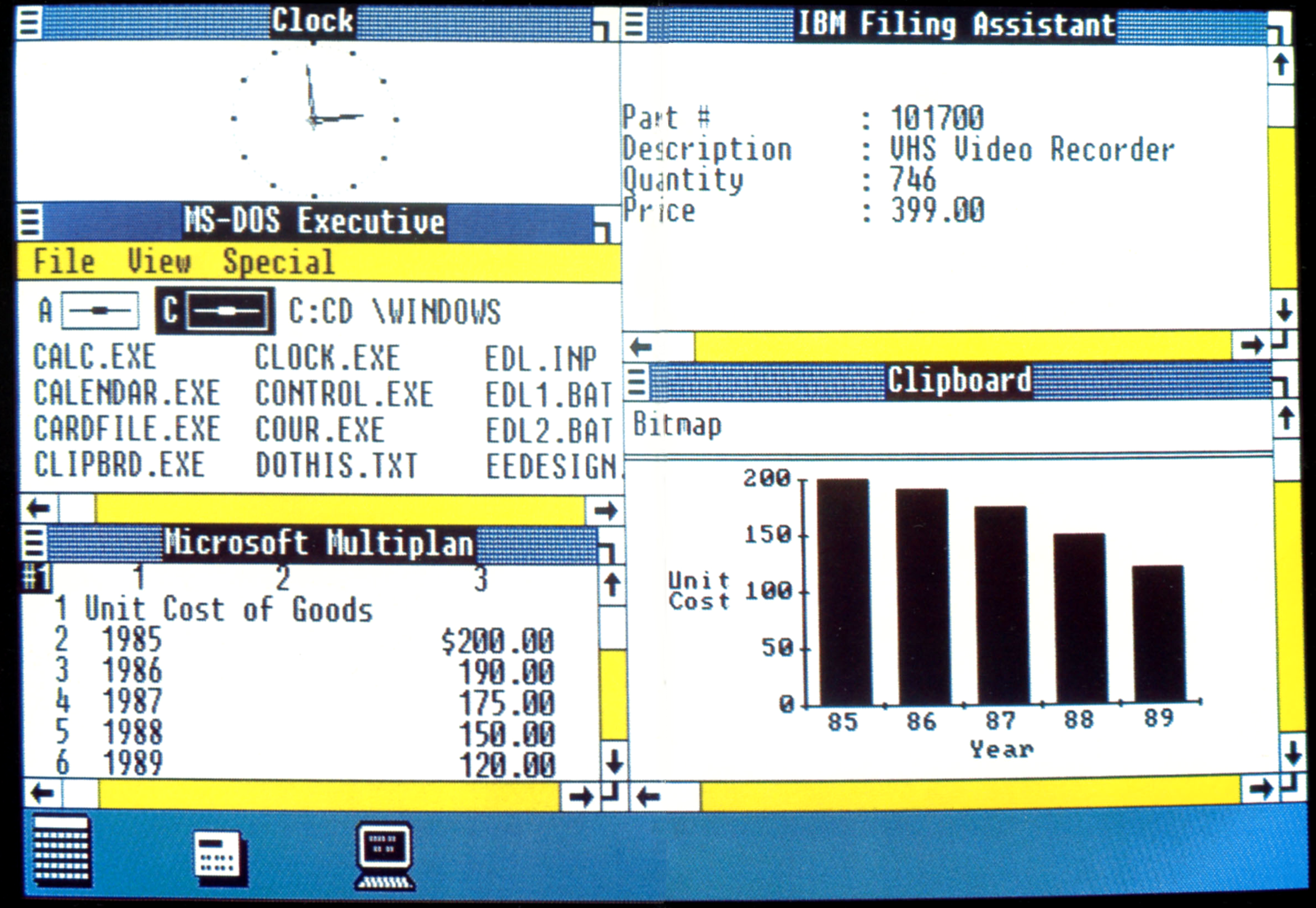

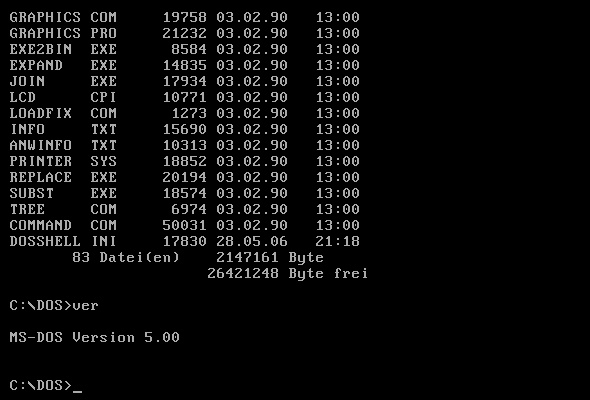

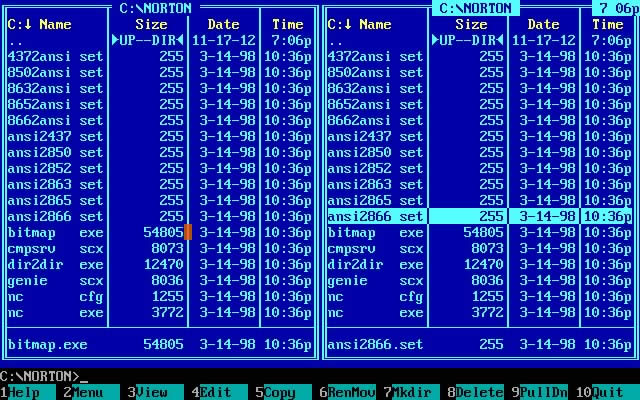

DOS had batch files. I don’t recall whether QBasic was standard with the OS. *checks* It was, for a period, with MS-DOS, though it was a subset of QuickBasic. I don’t believe that it was still included later in the Windows era.

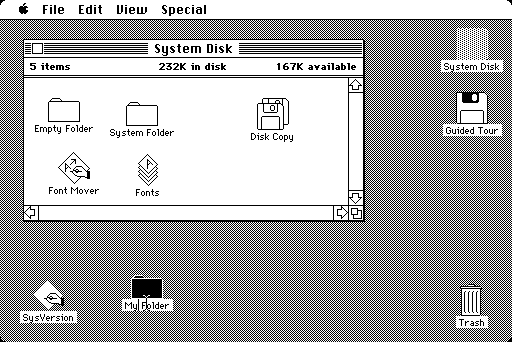

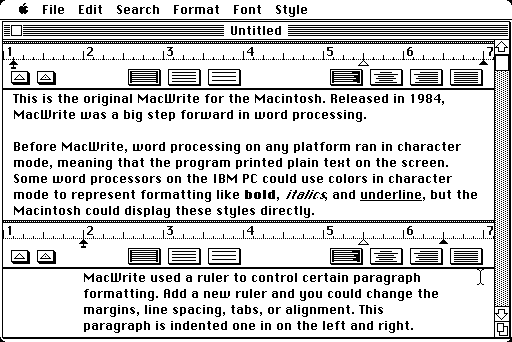

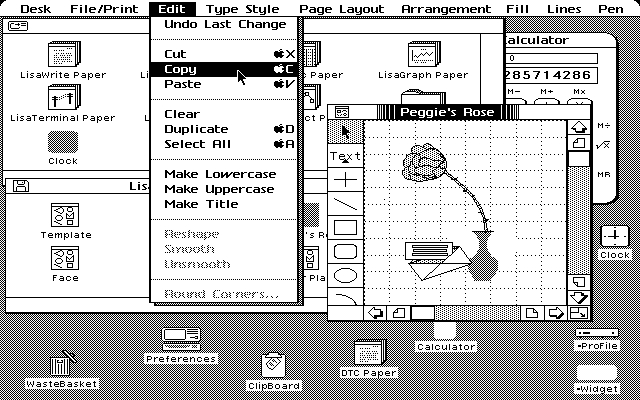

The Mac did not ship with a (free) programming environment.

I think that that was probably about the low point.

GNU/Linux was a wild improvement over this situation.

And widespread Internet availability also helped, as it made it easier to distribute programming environments and tools.

Today, I think that both MacOS and Windows ship with somewhat more sophisticated programming tools. I’m out of date on MacOS, but last I looked, it had access to the Unix stuff via `brew`, and probably has a set of MacOS-specific stuff out there that’s downloadable. Windows ships with PowerShell, and the most-basic edition of Visual Studio can be downloaded gratis.